I started experimenting with LLMs last year, in an attempt to create a tool that generated subject lines for emails based on email content.

I started with a basic wrapper around OpenAI, then worked my way further into the ecosystem of tools available: experimenting with HuggingFace transformers, self-hosting models like BERT, RoBERTa, and NLLB for some NLP experiments, training and fine-tuning these models, trying out LangChain and Streamlit, and diving a little bit into Pinecone.

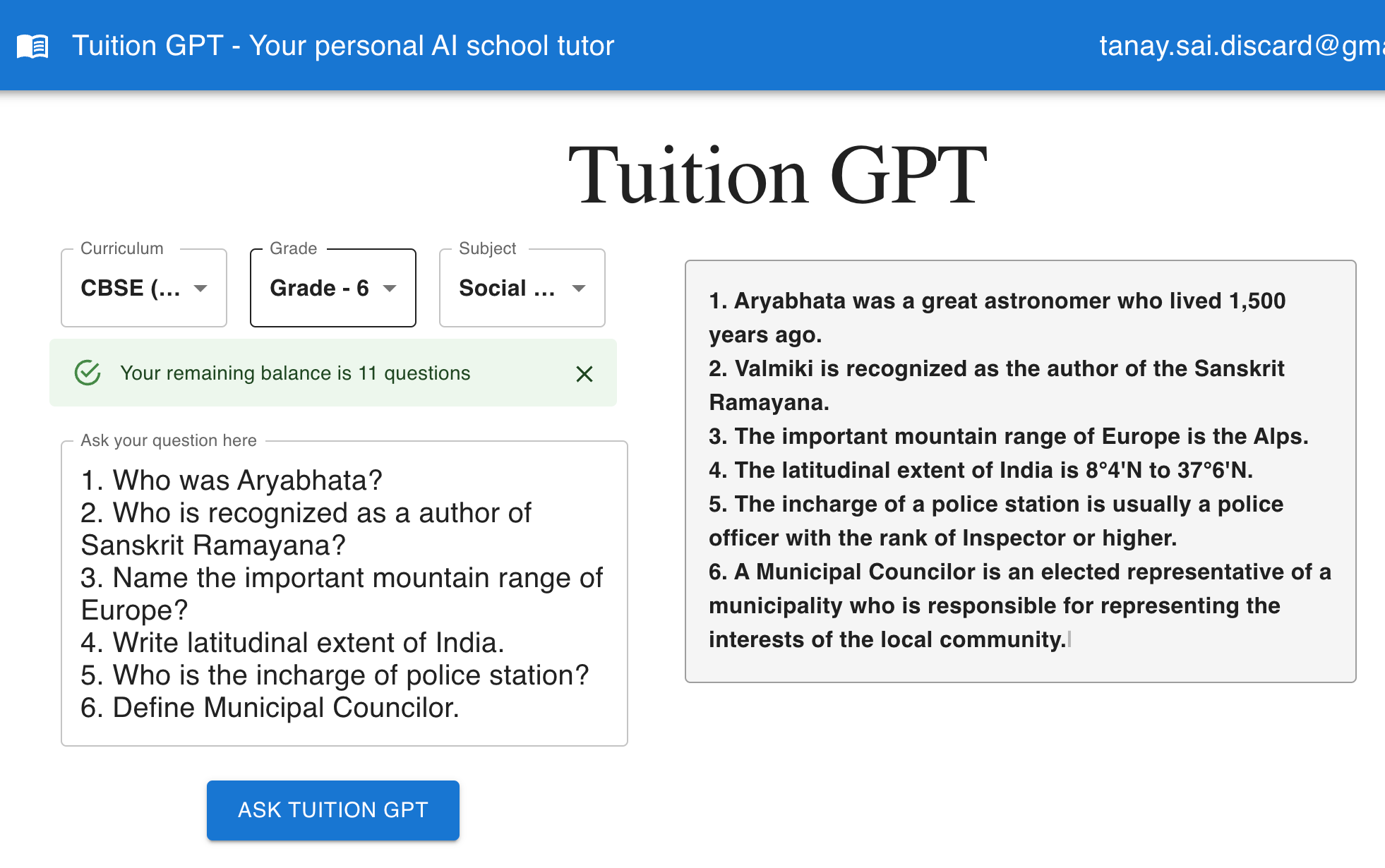

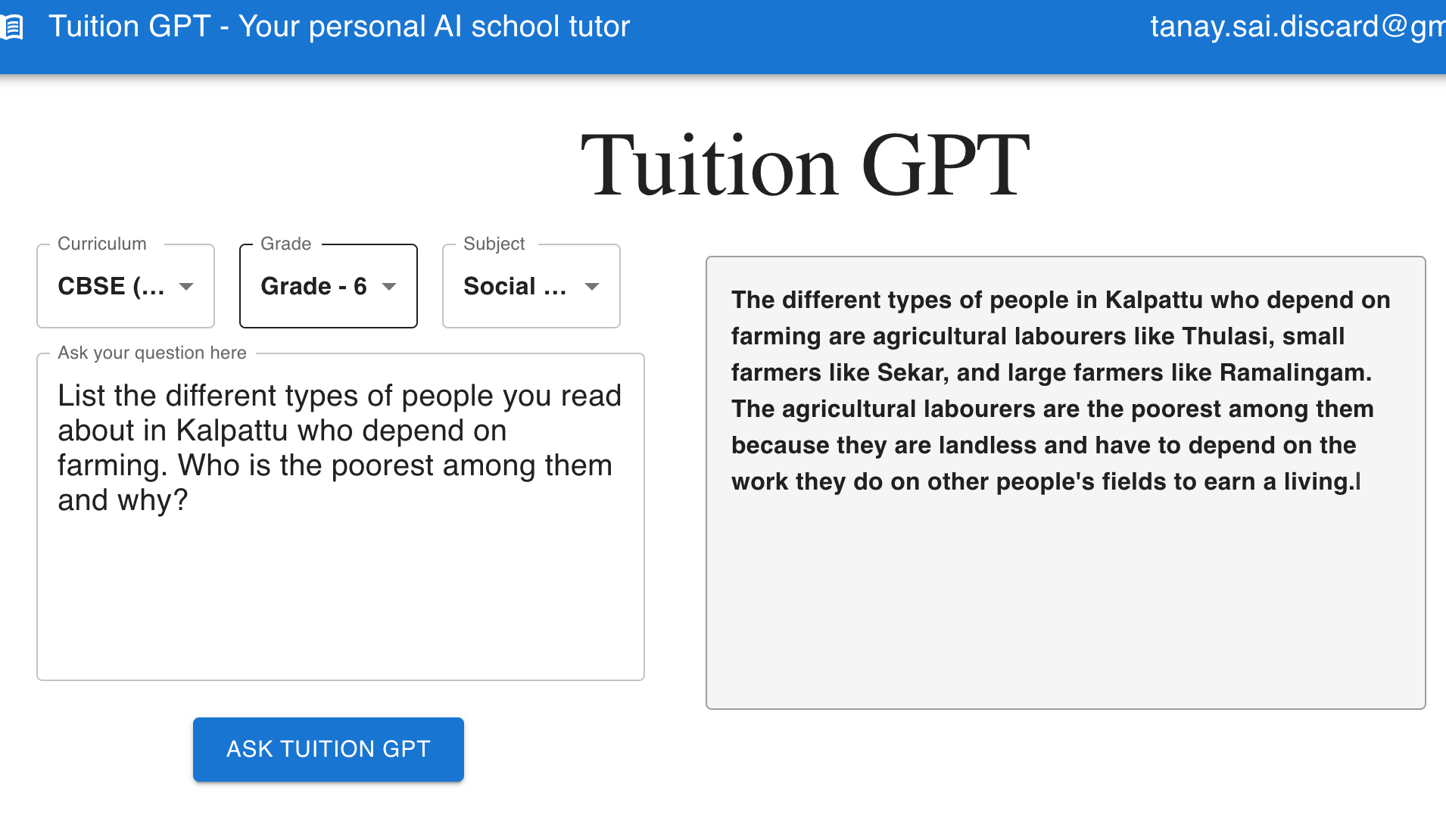

Built this last weekend - https://tuition-gpt.com/ - an interface to an LLM that does retrieval-augmented generation over my kid's school textbooks. I initially started with 6th grade books for him, but later extended it to all the textbooks I could find online, and built a basic MaterialUI-based UI so he could interact with the model.
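For anyone unfamiliar with retrieval augmented generation, here is a minimal sketch of the idea in Python. It is not the code behind tuition-gpt.com: the sample chunks, the function names (`retrieve`, `build_prompt`), and the prompt wording are all made up for illustration, and plain word-count cosine similarity stands in for the embedding model and vector store a real setup would more likely use. The gist is to find the textbook passages closest to the question and paste them into the prompt that goes to the LLM.

```python
import math
import re
from collections import Counter

# Hypothetical textbook chunks; a real system would split actual books into passages.
CHUNKS = [
    "Photosynthesis is the process by which green plants make food "
    "using sunlight, water and carbon dioxide.",
    "A fraction represents a part of a whole. The top number is the "
    "numerator and the bottom number is the denominator.",
    "The Mughal Empire was founded by Babur in 1526 after the first "
    "battle of Panipat.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(question, chunks, k=2):
    """Return the k chunks most similar to the question (the retrieval step)."""
    q = Counter(tokenize(question))
    ranked = sorted(
        chunks,
        key=lambda c: cosine(q, Counter(tokenize(c))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question, passages):
    """Combine the retrieved passages and the question (the augmentation step)."""
    context = "\n\n".join(passages)
    return (
        "Answer the question using only the textbook excerpts below.\n\n"
        f"Excerpts:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


if __name__ == "__main__":
    question = "How do plants make food?"
    passages = retrieve(question, CHUNKS, k=1)
    # The resulting string is what would be sent to the LLM (the generation step).
    print(build_prompt(question, passages))
```

The generation step is left out on purpose: the prompt string can be handed to whichever LLM API you prefer.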

I was pleasantly surprised by the output: it seems to do its job, and has helped him with 3 sessions of homework so far.

Give it a spin and see if it works for you!